Web Scraping and Regular Expressions - 1

Introduction

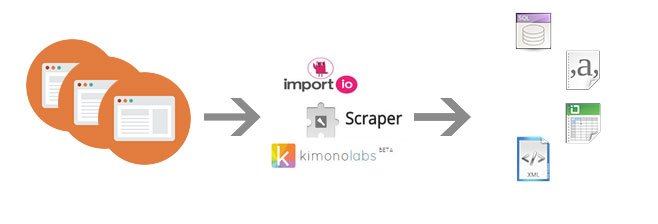

This quick tutorial uses a web scraping example to introduce how to use BeautifulSoup and regular expressions to mine web data quickly and easily. For details, please refer to the BeautifulSoup documentation [1].

BeautifulSoup

API

- Requests

Import the requests package:

```python
import requests
```

requests.get():

Calling the get(url) function from the requests package sends an HTTP GET request to the given URL.

It returns the server's reply, which is not the HTML file itself but a Response object holding the status code, headers, and body of that reply.

response.content:

Accessing .content on the Response returned by get() extracts the body of the reply, which for a web page is the raw HTML (as bytes). We can then parse and analyze it.
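A minimal sketch of the two steps above. So that it runs without internet access, it assumes a tiny local web server (built with Python's standard http.server module) in place of a real website; PAGE, DemoHandler, and fetch_html are hypothetical names used only for illustration.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests

# Hypothetical page served locally so the example does not depend on a real website
PAGE = b"<html><head><title>Demo</title></head><body><h1>Hello</h1></body></html>"


class DemoHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Answer every GET request with the fixed HTML page above
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(PAGE)))
        self.end_headers()
        self.wfile.write(PAGE)

    def log_message(self, format, *args):
        pass  # keep the console quiet


def fetch_html(url):
    """Hypothetical helper: send a GET request and return the raw HTML body."""
    response = requests.get(url, timeout=10)  # a Response object, not the HTML itself
    response.raise_for_status()               # raise an exception on 4xx/5xx status codes
    return response.content                   # the body as bytes (.text would give a str)


server = HTTPServer(("127.0.0.1", 0), DemoHandler)  # port 0: let the OS pick a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
url = "http://127.0.0.1:%d/" % server.server_address[1]

response = requests.get(url, timeout=10)
print(type(response).__name__, response.status_code)  # Response 200
html = fetch_html(url)
print(html)

server.shutdown()
server.server_close()
```

Against a real site, only the URL changes; the Response/content split stays the same.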

- BeautifulSoup

soup = BeautifulSoup(html …)

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'html.parser')
```

This creates a BeautifulSoup object: the HTML text in html (for example, response.content) is parsed with Python's built-in html.parser, and the parsed content is stored in the BeautifulSoup object.
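To check what the parser produced, prettify() returns the parsed tree as an indented string, one tag or string per line. A small sketch, assuming a short hand-written HTML string in place of a downloaded page:

```python
from bs4 import BeautifulSoup

# Hand-written HTML standing in for response.content
html = "<html><head><title>Demo</title></head><body><p>Hello <b>world</b></p></body></html>"

# "html.parser" is Python's built-in parser, so no extra parser package is needed
soup = BeautifulSoup(html, "html.parser")

print(type(soup).__name__)  # BeautifulSoup
print(soup.prettify())      # the same document, re-indented one node per line
```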

.tag_name

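After obtaining a BeautifulSoup object for an HTML file, we can use soup.tag_name (for example, soup.b) to get the first tag with that name in the document. The tag's own name is stored in its .name attribute.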

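Example:

```python
from bs4 import BeautifulSoup
soup = BeautifulSoup("<p><b>bold text</b></p>", "html.parser")
body = soup.b     # the first <b> tag in the document
print(body.name)  # b
```

This extracts the tag <b>.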
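Attribute-style access always returns the first matching tag, and None when the document has no such tag. A small sketch with a hypothetical HTML snippet:

```python
from bs4 import BeautifulSoup

html = "<p><b>first</b> and <b>second</b></p>"
soup = BeautifulSoup(html, "html.parser")

first_b = soup.b
print(first_b)       # <b>first</b>  (only the first <b> is returned)
print(first_b.name)  # b
print(soup.p.b)      # <b>first</b>  (dotted access can be chained down the tree)
print(soup.table)    # None  (there is no <table> in the document)
```

To get every <b> rather than just the first, use find_all("b").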

t.get_text() / t.text:

After extracting a tag, we can use the .get_text() method or the .text property to extract all of the text under that tag, including the text inside its descendants.

```python
soup = BeautifulSoup("<html><h1>Head 1</h1> <h2>Head 2</h2></html>", "html.parser")

soup.get_text()
# or
soup.text
```

Both return "Head 1 Head 2" directly.
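get_text() also takes a separator to put between the text pieces and a strip flag that trims whitespace around each piece and drops pieces that end up empty, which is handy for scraped pages full of indentation. A sketch on the same snippet:

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup("<html><h1>Head 1</h1> <h2>Head 2</h2></html>", "html.parser")

print(repr(soup.get_text()))                 # 'Head 1 Head 2'
print(repr(soup.get_text("|", strip=True)))  # 'Head 1|Head 2'
print(repr(soup.h2.get_text()))              # 'Head 2'  (text under one tag only)
```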

t.attrs["href"] or t["href"]:

In HTML, a tag can carry attributes inside its opening tag. We can use tag["attribute-name"] or tag.attrs["attribute-name"] to extract an attribute's value.

This example extracts the href link from the tag <a href=…></a>.

Example:

```python
from bs4 import BeautifulSoup

html = '<a href="https://www.baidu.com">Baidu</a>'
soup = BeautifulSoup(html, "html.parser")

# find the first tag called "a", i.e. <a href=...>
tag = soup.find("a")

print(tag["href"])
# or
print(tag.attrs["href"])
```
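.attrs is an ordinary dictionary, so the usual dict tools apply. In particular, tag.get("name") returns None instead of raising KeyError when the attribute is missing, which is safer when looping over many links. A sketch with made-up links:

```python
from bs4 import BeautifulSoup

html = """
<a href="https://www.baidu.com" class="search">Baidu</a>
<a name="anchor-only">No link here</a>
"""
soup = BeautifulSoup(html, "html.parser")

for tag in soup.find_all("a"):
    # tag.get() returns None for a missing attribute instead of raising KeyError
    print(tag.get_text(), "->", tag.get("href"))

first = soup.find("a")
print(first.attrs)  # {'href': 'https://www.baidu.com', 'class': ['search']}
```

Note that class comes back as a list, because an HTML tag can have several classes.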

t.contents and t.children:

A tag's direct children are available in two forms:

- .contents: a list of all the children, so a child can be accessed by index.
- .children: an iterator over the same children. It cannot be indexed, so we iterate over it (for example, with a for loop) to get each child.

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup("<html><head><title>The Dormouse's story</title></head></html>", "html.parser")
head_tag = soup.head

head_tag
# <head><title>The Dormouse's story</title></head>

head_tag.contents
# [<title>The Dormouse's story</title>]

title_tag = head_tag.contents[0]
title_tag
# <title>The Dormouse's story</title>

title_tag.contents
# ["The Dormouse's story"]
```
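Because .children is an iterator, it is usually consumed with a for loop; wrapping it in list() gives the same items as .contents. A sketch using a hand-typed <head> with two children (the <meta> tag is an assumption added for illustration):

```python
from bs4 import BeautifulSoup

html = ("<html><head><title>The Dormouse's story</title>"
        "<meta name=\"description\" content=\"demo\"></head></html>")
soup = BeautifulSoup(html, "html.parser")
head_tag = soup.head

# Loop over the direct children of <head>
for child in head_tag.children:
    print(child.name)  # title, then meta

# list() turns the iterator into a list equal to .contents
print(list(head_tag.children) == head_tag.contents)  # True
```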

.string:

If a tag has exactly one child and that child is a string, .string returns that string. If the tag's only child is another tag, .string returns that child's .string. If a tag has more than one child, it is unclear which string is meant, so .string returns None.

Example:

```python
head_tag
# <head><title>The Dormouse's story</title></head>

head_tag.string
# The Dormouse's story  (its only child, <title>, holds a single string)

head_tag.title.string
# The Dormouse's story
```
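.string becomes None as soon as a tag has more than one child, which is common with mixed text and markup; get_text() is the safer choice there. A sketch with a hypothetical paragraph:

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup("<p>Hello <b>world</b></p>", "html.parser")

print(soup.b.string)      # world  (<b> holds a single string)
print(soup.p.string)      # None   (<p> has two children: "Hello " and <b>)
print(soup.p.get_text())  # Hello world
```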

find(…):

It returns the first tag (or string) that matches the given filters, or None if nothing matches.

Example:

```python
soup = BeautifulSoup("<html> <a>text</a> <a>text2</a></html>", "html.parser")

soup.find('a', string="text")
```

It returns the first <a> tag whose string is exactly "text", i.e. <a>text</a>.

find_all():

Similar to find(), but it returns a list of all tags that match the filters (an empty list if there are none).
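A sketch of find_all() on a hypothetical list of links, showing the optional limit argument and the class_ keyword (written with a trailing underscore because class is a Python keyword):

```python
from bs4 import BeautifulSoup

html = """
<ul>
  <li><a class="team" href="/team/1">Team 1</a></li>
  <li><a class="team" href="/team/2">Team 2</a></li>
  <li><a class="ad" href="/promo">Promo</a></li>
</ul>
"""
soup = BeautifulSoup(html, "html.parser")

all_links = soup.find_all("a")                  # every <a> tag, in document order
team_links = soup.find_all("a", class_="team")  # only <a> tags with class "team"
first_two = soup.find_all("a", limit=2)         # stop after two matches

print(len(all_links))                   # 3
print([a["href"] for a in team_links])  # ['/team/1', '/team/2']
print([a.get_text() for a in first_two])  # ['Team 1', 'Team 2']
print(soup.find_all("table"))           # []  (no match gives an empty list)
```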

find().find_next()

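find() locates the first matching tag; calling find_next() on it then returns the first element after that tag, in document order, that matches the new filters. The search starts with the tag's own contents and continues past its end.

```python
soup = BeautifulSoup('<html><h1>Head 1 <a href="www.baidu.com"></a></h1> <h2>Head 2</h2></html>', "html.parser")

soup.find('h1').find_next(attrs={'href': "www.baidu.com"})
```

It returns: <a href="www.baidu.com"></a>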
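The difference between searching inside a tag and searching forward in the document matters when the target is not nested in the starting tag. In this hypothetical snippet the link sits after the <h1>, not inside it:

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup('<h1>Title</h1><p><a href="page.html">link</a></p>', "html.parser")
h1 = soup.find("h1")

print(h1.find("a"))       # None: find() only looks inside <h1>
print(h1.find_next("a"))  # <a href="page.html">link</a>: find_next() keeps going after <h1>
```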

Example: Capture the roster of a football team from the ESPN website

```python
import requests
```

It finds all h1 tags in the HTML page and returns them in a list.
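A minimal sketch of the idea, assuming a hypothetical roster page whose <h1> holds the team name and whose table rows hold one player each. The real ESPN markup is not shown here and will differ, so the tag layout, team name, and player names below are all assumptions; in practice html would come from requests.get(url).content.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a downloaded roster page
html = """
<h1>Example Team Roster</h1>
<table>
  <tr><th>Name</th><th>Position</th></tr>
  <tr><td>Player A</td><td>QB</td></tr>
  <tr><td>Player B</td><td>WR</td></tr>
</table>
"""
soup = BeautifulSoup(html, "html.parser")

# All <h1> tags, returned as a list
headings = soup.find_all("h1")
print([h.get_text() for h in headings])  # ['Example Team Roster']

# One (name, position) pair per data row; the header row has <th> cells, not <td>
roster = []
for row in soup.find_all("tr"):
    cells = [td.get_text(strip=True) for td in row.find_all("td")]
    if cells:
        roster.append(tuple(cells))
print(roster)  # [('Player A', 'QB'), ('Player B', 'WR')]
```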

Regular Expression

A regular expression is a pattern that describes a set of strings. It lets us find every piece of text that matches the pattern we design, which makes searching for string patterns much easier.

API

Import the regular expression module:

```python
import re
```

*:

Causes the resulting RE to match 0 or more repetitions of the preceding RE. Example: ab* matches "a" followed by zero or more "b"s.

+:

Causes the resulting RE to match 1 or more repetitions of the preceding RE. Example: ab+ matches "a" followed by one or more "b"s.

?:

Causes the resulting RE to match 0 or 1 repetitions of the preceding RE. Example: ab? matches "a" or "ab".

(…):

Matches whatever regular expression is inside the parentheses, and indicates the start and end of a group.

[…]:

Used to indicate a set of characters:

- [a-z]: characters from a to z
- [a-zA-Z]: characters from a to z and from A to Z
- [a-zA-Z0-9]: characters from a to z, from A to Z, and from 0 to 9

A|B:

Matches either pattern A or pattern B.

(?=…):

Matches if … matches next, but doesn't consume any of the string (lookahead).

(?!…):

Matches if … doesn't match next (negative lookahead).

(?<=…), (?<!…):

Matches if the current position in the string is preceded (the first form) or not preceded (the second form) by a match for … that ends at the current position (lookbehind).

re.search("(text)", input):

Scans input for the first location where the regular expression (the first argument) matches.

It returns a match object, or None if there is no match.

re.compile("([a-z]text)"):

Compiles a regular expression into a pattern object. The pattern object has its own match() and search() methods, which no longer need the regular expression passed as a parameter.

match.group(0):

Called on a match object, it returns the whole matched string. group(1), group(2), and so on return the text matched by each parenthesized group.
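A few of the operators above in action, plus re.compile(). Raw strings (r"...") are used so that backslashes reach the regex engine unchanged; the sample strings are made up for illustration:

```python
import re

# Quantifiers act on the character just before them (here "b")
print(bool(re.fullmatch(r"ab*", "a")))    # True: zero b's are allowed
print(bool(re.fullmatch(r"ab+", "a")))    # False: at least one b is required
print(bool(re.fullmatch(r"ab?", "abb")))  # False: at most one b is allowed

# Character sets and alternation
print(re.findall(r"[a-z]+", "abc DEF 123"))        # ['abc']
print(re.findall(r"[a-zA-Z0-9]+", "abc DEF 123"))  # ['abc', 'DEF', '123']
print(re.findall(r"cat|dog", "cat, dog, bird"))    # ['cat', 'dog']

# Lookarounds test the context but do not include it in the match
print(re.findall(r"\w+(?=\.com)", "google.com bing.org"))  # ['google']
print(re.findall(r"\b\w+\b(?!\()", "print(x) + y"))        # ['x', 'y']
print(re.findall(r"(?<=\$)\d+", "cost $30, qty 4"))        # ['30']
print(re.findall(r"(?<!\$)\b\d+", "cost $30, qty 4"))      # ['4']

# A compiled pattern remembers the regex, so search() only needs the text
pattern = re.compile(r"[a-z]+text")
match = pattern.search("xx sometext yy")
print(match.group(0))  # sometext
```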

Example:

```python
import re

s = "Hello World. Hello Everyone"

# match a run of 0 or more letters (a-z or A-Z) followed by "one"
obj = re.search("([a-zA-Z]*one)", s)

if obj:
    print(obj.group(0))
```

This example prints "Everyone".
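group(0) is always the whole match; each pair of parentheses in the pattern adds a numbered group that can be read with group(1), group(2), and so on. When every occurrence is needed rather than only the first, re.findall() returns them all. A sketch on made-up text:

```python
import re

s = "Call 555-1234 or 555-9876"

match = re.search(r"(\d+)-(\d+)", s)
if match:
    print(match.group(0))  # 555-1234  (the whole match)
    print(match.group(1))  # 555       (first parenthesized group)
    print(match.group(2))  # 1234      (second parenthesized group)

# findall() returns every non-overlapping match; with groups it returns tuples
print(re.findall(r"\d+-\d+", s))      # ['555-1234', '555-9876']
print(re.findall(r"(\d+)-(\d+)", s))  # [('555', '1234'), ('555', '9876')]

# The pattern from the example above, applied to every word ending in "one"
print(re.findall(r"[a-zA-Z]*one", "Hello Everyone, hello someone"))  # ['Everyone', 'someone']
```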

Example: Find a specific pattern in an HTML file

Search for all strings that contain the pattern "SC" and end with "SC".

```python
import requests
```
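Regular expressions combine naturally with BeautifulSoup: find_all() accepts a compiled pattern for its string argument and keeps the strings the pattern matches. BeautifulSoup applies the pattern with search(), so it may match anywhere in the string, and the $ anchor pins it to the end. A sketch of the "ends with SC" search on hypothetical markup:

```python
import re

from bs4 import BeautifulSoup

# Hypothetical markup used only for illustration
html = """
<ul>
  <li>USC</li>
  <li>UCLA</li>
  <li>LSU</li>
  <li>SC State SC</li>
</ul>
"""
soup = BeautifulSoup(html, "html.parser")

# Keep only the text strings that end with "SC"
matches = soup.find_all(string=re.compile(r"SC$"))
print([str(m) for m in matches])  # ['USC', 'SC State SC']
```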

Further work

We can mine much more information from other websites. However, we first need to understand the structure of each target page: its layout, its tag names and attributes, and sometimes the scripts it runs.

To find out which methods and attributes a BeautifulSoup object provides, we can use Python's built-in inspect module to explore its structure [3].
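A small sketch of that exploration with the inspect module. It inspects the BeautifulSoup class rather than a parsed document, and the exact method list and signatures depend on the installed Beautiful Soup version:

```python
import inspect

from bs4 import BeautifulSoup

# Public methods available on BeautifulSoup objects (including those inherited from Tag)
public_methods = [
    name
    for name, _ in inspect.getmembers(BeautifulSoup, inspect.isfunction)
    if not name.startswith("_")
]
print(public_methods)

# The parameters a particular method accepts
print(inspect.signature(BeautifulSoup.find_all))
```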

Reference

[1] BeautifulSoup

[2] Regular Expression

[3] Inspect package in Python